[T]here's a widespread belief that existing social media platforms suck, and that we need better ones. And this is true for different reasons: centralized and arbitrary censorship and manipulation, low quality of discourse, and of course the concept of engagement, and the kinds of engagement that are favored are very misaligned with quality.

-- Vitalik Buterin, EthCC 2021: Things That Matter Outside of DeFi

If you've ever talked about politics, fandoms, cryptocurrencies, or anything else remotely controversial, you've probably had some discussions that felt unproductive, aggravating, or hostile. Insults are thrown around with impunity, entire fields of strawmen are put to the fire, and goalposts are launched into the atmosphere. Why is it that discourse on the internet can be so bad?

Here are some problems we've identified with online discourse (not even close to exhaustive):

- People are uninformed or underinformed

- People are disproportionately aggressive, hostile, and escalate with very little provocation

- The prevalence of "mob mentality", cancelling, public executions

- Censorship

- Low conversational investment

- Spam

- Optics vs. Truth

  - "Safe" content that isn't valuable

  - Engagement is not a good measure of quality

- Harassment

What is it about online social media that causes these issues?

Many of them are noticeably absent from meatspace conversations (at least, in the author's estimation).

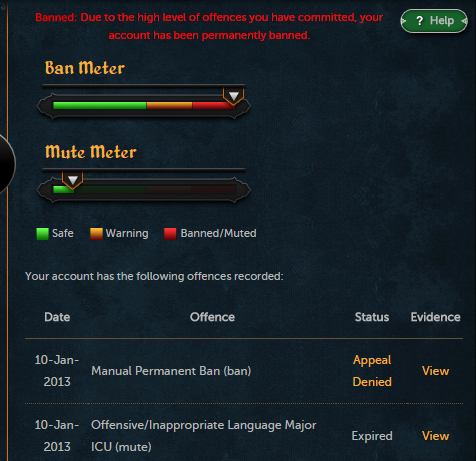

An almost ubiquitous lower-level issue is a lack of accountability for users of online social media. If a user engages in spam or harassment, typically the worst that can happen to them is that their account is deleted or banned. In particularly egregious cases, their IP address might be blacklisted as well. For users with a large amount of social credit (popularity, followers, subscribers, etc.), this can be a significant consequence, and these users typically avoid such behavior. For the rest of us (and especially for those who regularly spam or harass others), the cost of getting banned and creating a new account can be trivial.

Users of online social media may also find themselves forgetting the human or, in the words of Reddit admin /u/cupcake1713 in this aptly named Reddit blog post:

The internet is a wonderful tool for interacting with people from all walks of life, but the anonymity it can afford can make it easy to forget that really, on the other end of the screens and keyboards, we're all just people.

Users of online social media (and of the internet in general) often gravitate toward informational echo chambers. This is compounded by the human tendency to reject conflicting information and worldviews, and it likely fuels much political argument. The problem is further exacerbated by the way some social media platform algorithms shape what content a user sees.

Researchers have observed that individual choice has the strongest impact on how ideologically diverse the media people consume is, but platform algorithms play a part as well. On Facebook, for example, algorithmic ranking reduces the amount of ideologically "cross-cutting" content (i.e., conservative content for a liberal, liberal content for a conservative) by 15%. This divide, or indeed any divide that leads to distinct narratives, can produce increasingly polarized communities. And because "the most frequently shared links [are] clearly aligned with largely liberal or conservative populations", social media platforms can foster such echo chambers ([source] [free source] (warning: FB link)).

On a different note, the metrics of quality found on Web2 social media platforms are often a poor measure of the actual quality of content.

Encourag[e] posts that look good one year from now and not just today, things that actually have long term value and that aren't just 'let's spin it out so it goes viral, we get some engagement'—and it turns out it's actually total junk.

-- Vitalik, EthCC 2021

This problem manifests on every large Web2 social media platform, since they all have features and metrics such as likes, shares, retweets, followers, subscribers, friends, and comments. These interact to form a complicated and literally sentient (well, probably) system of incentives.

People act and speak differently simply because they are being observed, so what happens when observation comes with a reward system? The result is a sort of social media zeitgeist, a collection of (not necessarily compatible) ideas and ideals that weighs on every social media post. If you satisfy the zeitgeist, you get extra likes and follows. But is this right? Social media values this content, but is it actually valuable? Maybe, but often not in the way it's measured.

Another distinguishing feature of many types of online social media is the audience. When you tweet something, post on Facebook or Instagram, or even post in Discord (or even in Status!), you may be speaking to hundreds, thousands, or hundreds of thousands of people. Commenting on a particular post is also frequently more a statement to the audience than to the original poster. This interacts strongly with the issue of Optics vs. Truth: engagement (and thus validation) depends on popularity, social norms, rhetoric, and all sorts of other things, but it doesn't depend very much on truth. The audience also contributes rather directly to the problem of mob mentality.

Not to besmirch existing Web2 social media platforms (more than they deserve, at least): these are intricate and difficult problems, possibly even impossible ones. How do you "fix" the fact that many social media platforms involve communicating with a potentially large audience? How do you fix echo chambers without resorting to authoritarianism? Is there a way to get users to invest more in their conversations, and not block other users over disagreement, that doesn't infringe on their freedom? How can we increase the cost of account creation without impeding good-faith users or violating privacy? What constitutes harassment? Is it an angry and insulting message? A death threat? What about a thousand of them? Is there anything a platform can do to combat the human tendency to reject conflicting worldviews?

One of the most realistic avenues for progress in this regard is to increase user accountability. As noted above, this must be done carefully to avoid sacrificing privacy.

If creating an account and sending a message are too easy, spam and harassment will occur. Web2 platforms have no problem collecting phone numbers, legal names, state-issued identification, etc., but that's because Web2 platforms don't value or prioritize your privacy. Web3 platforms don't even need to make account creation harder (in Status, for example, it's free, doesn't require an email address or phone number, and takes seconds). Instead, the hurdle can be applied at the step of sending a message. Web3 platforms and communities can require a stake of some cryptocurrency, a donation, or even ownership of an NFT to join. In the case of "staking", the tokens (or NFT) could be forfeited if the user is banned, disincentivizing some of the behaviors that lead to lower-quality discourse.
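To make the staking idea concrete, here is a minimal in-memory sketch, assuming a community that requires a fixed token stake before a member can post and forfeits the whole stake on a ban. The class `StakedCommunity`, its methods, and the `treasury` that collects forfeited stakes are hypothetical illustrations, not Status's actual design or any real contract API; a production version would live on-chain and handle much more (appeals, partial slashing, NFTs instead of fungible tokens).

```python
from dataclasses import dataclass, field


@dataclass
class StakedCommunity:
    """Hypothetical community that requires a token stake before a member may post.

    All names are illustrative; this is not Status's (or any chain's) real API.
    """

    min_stake: int  # tokens required for posting rights (assumed: whole tokens)
    stakes: dict[str, int] = field(default_factory=dict)  # member -> tokens staked
    banned: set[str] = field(default_factory=set)
    treasury: int = 0  # forfeited stakes end up here (assumption: could be burned instead)

    def join(self, member: str, amount: int) -> None:
        """Lock `amount` tokens; refused if the member is banned or the stake is too small."""
        if member in self.banned:
            raise PermissionError(f"{member} is banned")
        if amount < self.min_stake:
            raise ValueError(f"stake {amount} is below the minimum of {self.min_stake}")
        self.stakes[member] = self.stakes.get(member, 0) + amount

    def can_post(self, member: str) -> bool:
        """Only unbanned members with a sufficient stake may send messages."""
        return member not in self.banned and self.stakes.get(member, 0) >= self.min_stake

    def ban(self, member: str) -> int:
        """Ban a member and forfeit their entire stake; returns the amount slashed."""
        self.banned.add(member)
        slashed = self.stakes.pop(member, 0)
        self.treasury += slashed
        return slashed

    def leave(self, member: str) -> int:
        """A member in good standing withdraws their stake when leaving."""
        return self.stakes.pop(member, 0)


community = StakedCommunity(min_stake=10)
community.join("alice", 10)
community.join("mallory", 10)
slashed = community.ban("mallory")  # mallory loses the 10 staked tokens
```

Note that the ban list only blocks one identity, and in a Web3 setting a fresh key costs nothing. The real deterrent in this sketch is that every new identity has to put up (and risk losing) another stake before it can post.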

We've discussed similar solutions in the context of spam prevention, here and here.

Community self-moderation is another way to increase user accountability, but ultimately its effectiveness depends on how costly the consequences are. Even if a community is effectively self-moderated, bans or other disciplinary actions must matter to the offending party to be an effective deterrent. So we come back to increasing the difficulty of account creation, or of sending a message.

The technology of the future will almost certainly beget social media platforms that solve problems that seem intractable today, just as crypto today is solving problems of the past. The solutions discussed in this article are by no means comprehensive, and we will all continue learning as we move forward. Much like the builders of early social networks, and even of the early internet, we have the good fortune to contribute to an evolving universe of experimentation. We also have the benefit of hindsight about what went wrong with Web2, so we can build a better internet (one that doesn't rely on unbalanced incentives and exploitative financial models). Perhaps we'll even overcome the tendency to dismiss conflicting worldviews with some sort of cybernetically enhanced critical thinking.